[ad_1]

LLMs have enabled us to process large amounts of text data very efficiently, and in a reliable and fast manner. One of the most popular use cases that has emerged over the past two years is Retrieval-Augmented Generation (RAG).

RAG allows us to take a number of documents (from a couple to even a hundred thousand), create a knowledge database with the documents, and then query it and receive answers with relevant sources based on the documents.

Instead of having to manually search which would take hours or even days, we can get an LLM to search for us with just a few seconds of latency.

Cloud-based vs Local

There are two parts to making a RAG system work: the knowledge database, and the LLM. Think of the former as a library and the latter as a very efficient library clerk.

The first design decision when creating such a system is whether you’ll want to host it in the cloud, or locally. Local deployments have a cost advantage at scale and also help safeguard your privacy. On the other hand, the cloud can offer low startup costs and little to no maintenance.

For the sake of clearly demonstrating the concepts around RAG, we’ll opt for a cloud deployment during this guide, but will also be leaving notes on going local at the end.

The knowledge (vector) database

So the first thing we need to do is create a knowledge database (techinically called a vector database). The way this is done is by running the documents through an embedding model that will create a vector out of each one. The embedding models are very good at understanding text and the vectors generated will have similar documents closer together in the vector space.

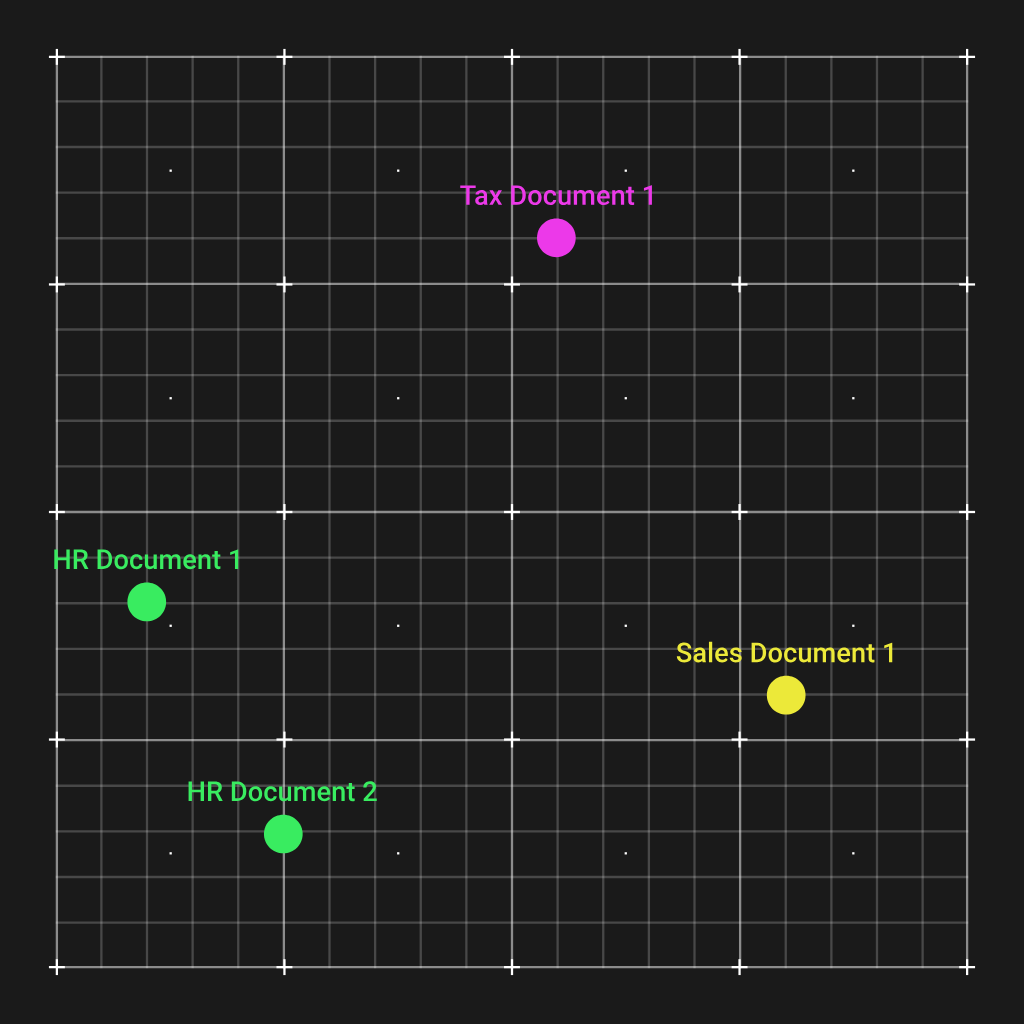

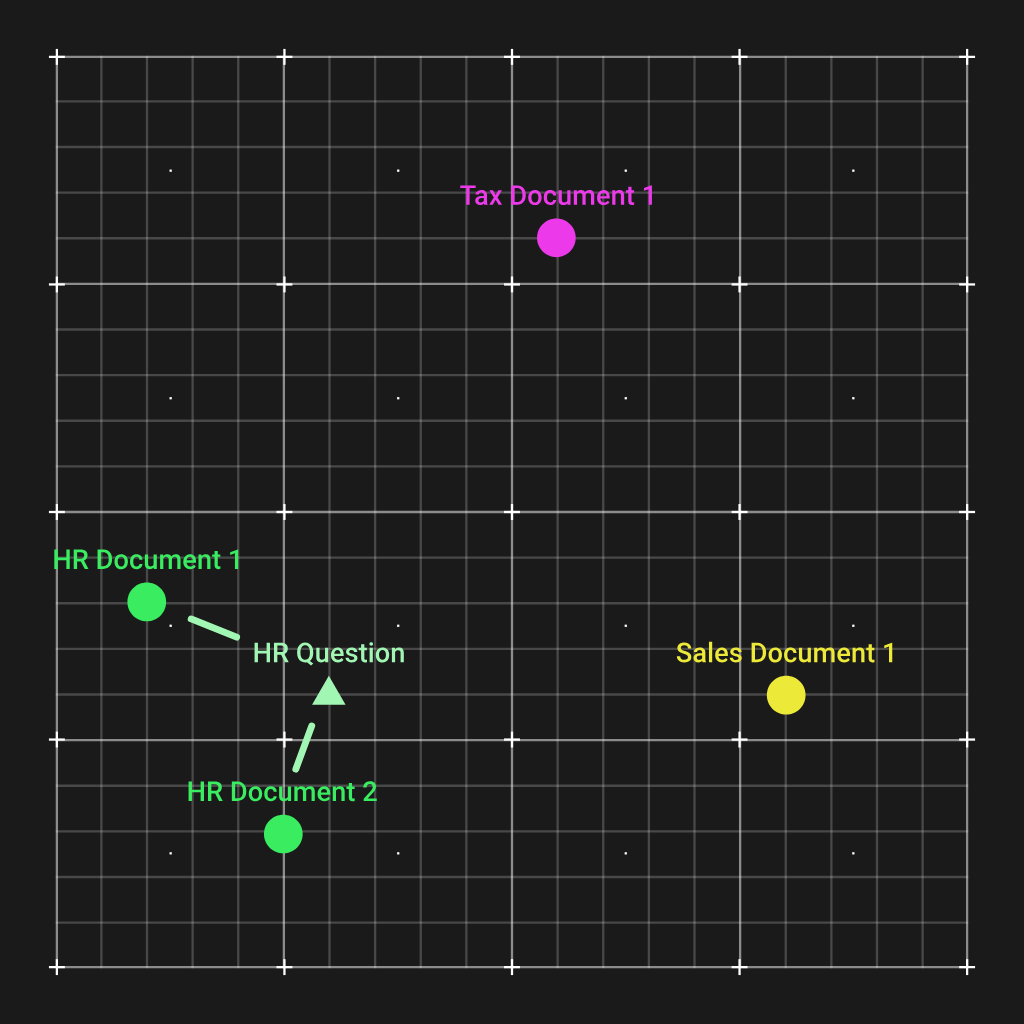

This is incredibly convenient, and we can illustrate it by plotting the vectors of four documents of a hypothetical organization in a 2D vector space:

As you see, the two HR documents were grouped together, and are far from the other types of documents. Now, the way this helps us is that when we get a question regarding HR, we can calculate an embeddings vector for that question, which will also end up close to the two HR documents.

And by a simple Euclidian distance calculation, we can match the most relevant documents to give to the LLM so it can answer the question.

There are is a vast array of embedding algorithms to choose from which are all compared on the MTEB leaderboard. An interesting fact here is that a lot of open-source models are taking the lead compared to proprietary providers like OpenAI.

Besides the overall score, two more columns to take into account on that leaderboard are the model size, and the max tokens of each model.

The model size will determine how much V(RAM) will be needed to load the model in memory as well as how fast embedding computations will be. Each model can only embed a certain amount of tokens, so very large files might need to be split before being embedded.

Lastly, the models can only embed text, so any PDFs will need to be converted, and rich elements like images should be either captioned (using an AI image caption model) or discarded.

The open-source local embedding models can be ran locally using transformers. For the OpenAI embedding model, you’ll need an OpenAI API key instead.

Here is Python code to create embeddings using the OpenAI API and a simple pickle file-system-based vector database:

import os

from openai import OpenAI

import pickle

openai = OpenAI(

api_key="your_openai_api_key"

)

directory = "doc1"

embeddings_store = {}

def embed_text(text):

"""Embed text using OpenAI embeddings."""

response = openai.embeddings.create(

input=text,

model="text-embedding-3-large"

)

return response.data[0].embedding

def process_and_store_files(directory):

"""Process .txt files, embed them, and store in-memory."""

for filename in os.listdir(directory):

if filename.endswith(".txt"):

file_path = os.path.join(directory, filename)

with open(file_path, 'r', encoding='utf-8') as file:

content = file.read()

embedding = embed_text(content)

embeddings_store[filename] = embedding

print(f"Stored embedding for {filename}")

def save_embeddings_to_file(file_path):

"""Save the embeddings dictionary to a file."""

with open(file_path, 'wb') as f:

pickle.dump(embeddings_store, f)

print(f"Embeddings saved to {file_path}")

def load_embeddings_from_file(file_path):

"""Load embeddings dictionary from a file."""

with open(file_path, 'rb') as f:

embeddings_store = pickle.load(f)

print(f"Embeddings loaded from {file_path}")

return embeddings_store

process_and_store_files(directory)

save_embeddings_to_file("embeddings_store.pkl")

LLM

Now that we have the documents stored in the database, let’s create a function to get the top 3 most relevant documents based on a query:

import numpy as np

def get_top_k_relevant(query, embeddings_store, top_k=3):

"""

Given a query string and a dictionary of document embeddings,

return the top_k documents most relevant (lowest Euclidean distance).

"""

query_embedding = embed_text(query)

distances = []

for doc_id, doc_embedding in embeddings_store.items():

dist = np.linalg.norm(np.array(query_embedding) - np.array(doc_embedding))

distances.append((doc_id, dist))

distances.sort(key=lambda x: x[1])

return distances[:top_k]

And now that we have the documents comes the simple part, which is prompting our LLM, GPT-4o in this case, to give an answer based on them:

from openai import OpenAI

openai = OpenAI(

api_key="your_openai_api_key"

)

def answer_query_with_context(query, doc_store, embeddings_store, top_k=3):

"""

Given a query, find the top_k most relevant documents and prompt GPT-4o

to answer the query using those documents as context.

"""

best_matches = get_top_k_relevant(query, embeddings_store, top_k)

context = ""

for doc_id, distance in best_matches:

doc_content = doc_store.get(doc_id, "")

context += f"--- Document: {doc_id} (Distance: {distance:.4f}) ---\n{doc_content}\n\n"

completion = openai.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "system",

"content": (

"You are a helpful assistant. Use the provided context to answer the user’s query. "

"If the answer isn't in the provided context, say you don't have enough information."

)

},

{

"role": "user",

"content": (

f"Context:\n{context}\n"

f"Question:\n{query}\n\n"

"Please provide a concise, accurate answer based on the above documents."

)

}

],

temperature=0.7

)

answer = completion.choices[0].message.content

return answer

Conclusion

There you have it! This is an intuitive implementation of RAG with a lot of room for improvement. Here are some ideas on where to go next:

[ad_2]

Source link